I am a huge Excel fan, because it allows easy data transformation and its flexibility is second to none, despite its complexity. But I also use other tools, depending on the requirements.

Many users, however, use only Excel, with no alternative available. Good or bad, this is the norm in many organisations, and trying to change this habit too early, while also pushing new concepts or ideas, only puts strain on these users, raising barriers and potentially preventing the very change we are pursuing.

That is where PowerQuery comes to the rescue. Ironically enough, I discovered it by accident while trying to help my partner with something from her work (she is a heavy Excel user). PowerQuery is a very powerful data analysis engine (it goes hand in hand with PowerPivot, another Excel data modelling tool I am really fond of!) that, in a nutshell and from a developer's point of view, enables database-like querying and reporting scenarios.

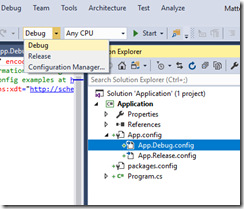

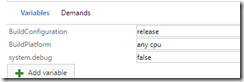

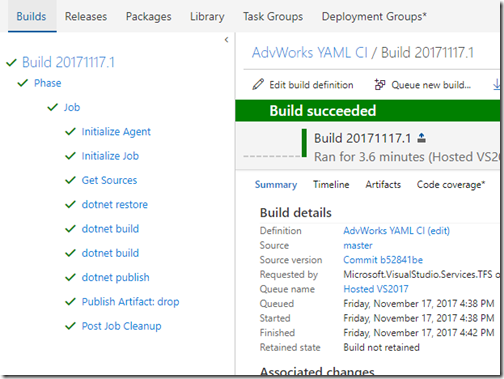

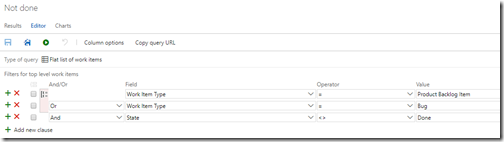

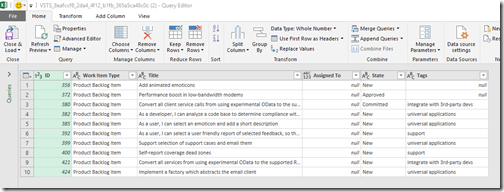

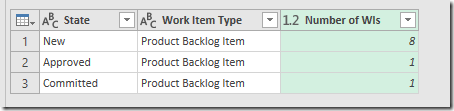

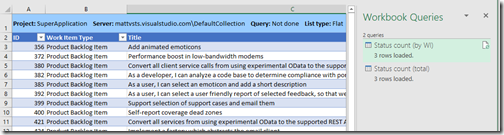

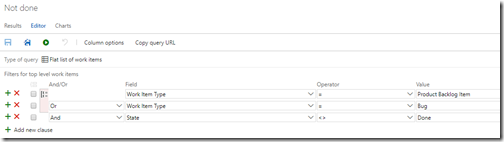

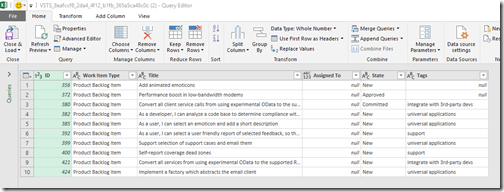

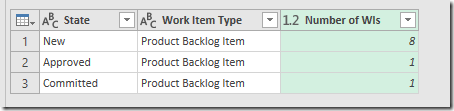

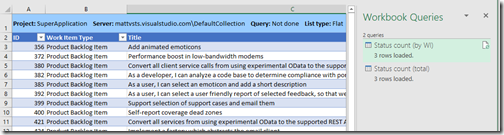

So what can you do with it? Well, let's take a very simple example: you have a TFS/VSTS query that returns all the PBIs in a backlog that are not Done, and you want to report on this query to know how many Work Items are in each state, but without using TFS or VSTS at all.

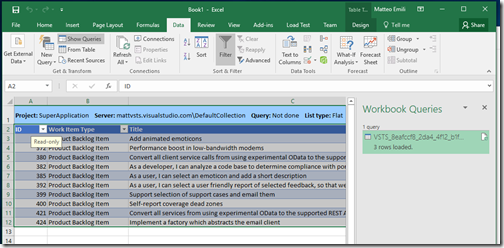

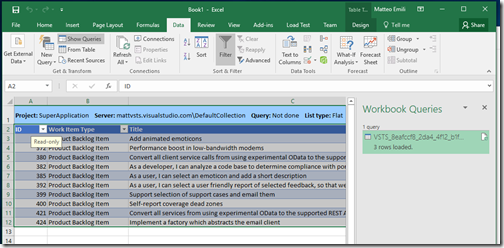

That is where Excel easily comes to the rescue: you can connect it to TFS/VSTS with the Team add-in and download the raw data from the query you saved there:

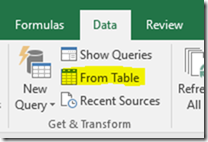

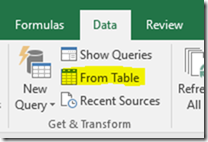

Select the raw data you want to use and, from the Data ribbon, select From Table. Excel will automatically recognise the data source you want to use; if you don't select the data beforehand, you will have to input the range manually.

PowerQuery now kicks in:

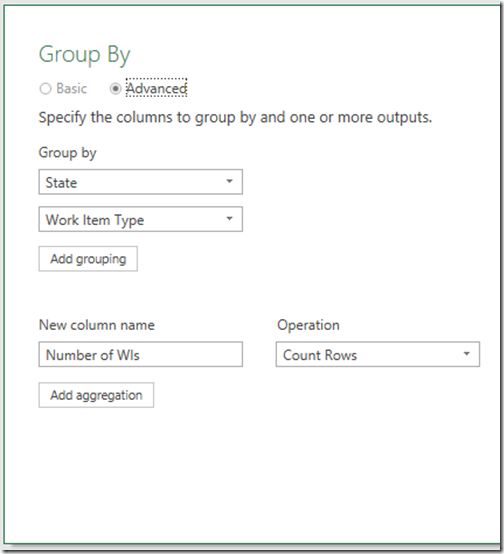

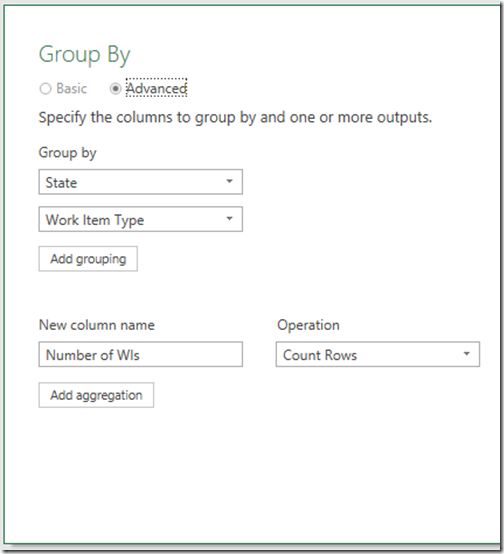
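Behind the scenes, From Table records the import as query steps. The sketch below shows roughly what the generated M might look like. The table name `VSTS_Table` is a hypothetical placeholder for the real `VSTS_<GUID>` name. The column names are assumptions based on a typical backlog query, so yours will differ.

```powerquery
let
    // "VSTS_Table" is a placeholder: use the actual name of the table the Team add-in created
    Source = Excel.CurrentWorkbook(){[Name="VSTS_Table"]}[Content],
    // Assumed column names; PowerQuery detects and sets a type for each column it finds
    #"Changed Type" = Table.TransformColumnTypes(Source, {{"ID", Int64.Type}, {"Work Item Type", type text}, {"Title", type text}, {"State", type text}})
in
    #"Changed Type"
```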

What we want to do is pretty easy and straightforward, so we are going to use Group By:

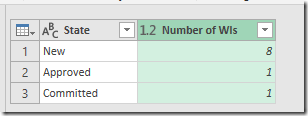

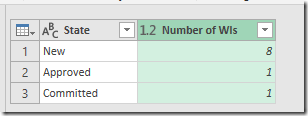

A basic Group By on the State column works well here.

If you use the advanced option, you can group by multiple columns. If your requirements include Bugs as well as PBIs, that is what you want, for example grouping by both Work Item Type and State.
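In M, the Group By step maps to `Table.Group`. Below is a minimal sketch of the advanced, two-column grouping. It uses the same hypothetical `VSTS_Table` name and assumes a `Work Item Type` column exists. The basic version differs only in passing `{"State"}` as the key list.

```powerquery
let
    // Placeholder table name, as above
    Source = Excel.CurrentWorkbook(){[Name="VSTS_Table"]}[Content],
    // One output row per (Work Item Type, State) pair, with the number of rows in each group
    #"Grouped Rows" = Table.Group(Source, {"Work Item Type", "State"}, {{"Count", each Table.RowCount(_), Int64.Type}})
in
    #"Grouped Rows"
```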

Now, if you click Close & Load, you are done. But how is this useful?

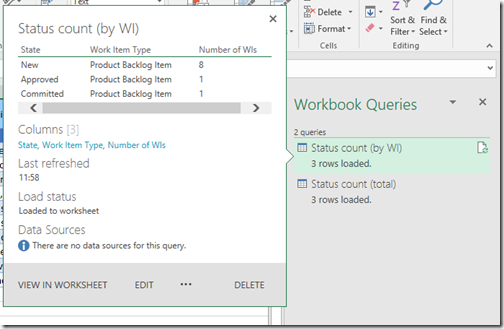

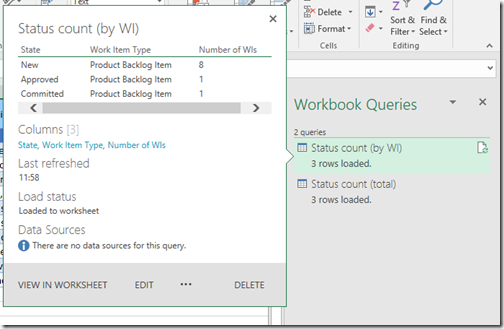

Easy: the query shows up in a pane on the side of your spreadsheet.

Click on the query you created, and you will immediately be shown the result.



Moreover, it is dynamic: once the work item data is refreshed, refreshing the query updates the result accordingly.

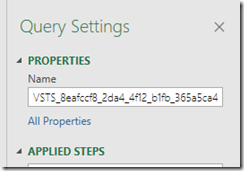

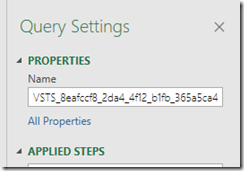

Going deeper, the default name is not great: `VSTS_<GUID>` doesn't say much. You can change it in the Query Editor.

Underneath, you can see the Applied Steps, and that is where things get interesting:

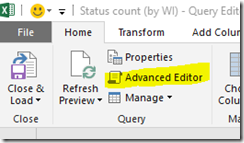

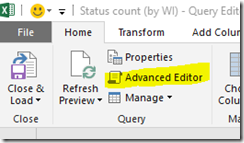

This is the visual representation of all the data transformations you applied. To access these steps and change them directly, click Advanced Editor.

You will get the actual PowerQuery source code (it is a functional language called M, by the way).

This is where you can start creating your custom transformations, leading to dynamic custom reports based on TFS/VSTS data.
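Here is one hypothetical custom transformation, not one of the steps from the walkthrough above. The sketch groups by State and adds an illustrative `Share` column holding each state's fraction of the total. It then sorts the largest groups first. The table name is again a placeholder.

```powerquery
let
    // Placeholder table name, as above
    Source = Excel.CurrentWorkbook(){[Name="VSTS_Table"]}[Content],
    Grouped = Table.Group(Source, {"State"}, {{"Count", each Table.RowCount(_), Int64.Type}}),
    // Total number of work items across all states (Grouped[Count] returns the column as a list)
    Total = List.Sum(Grouped[Count]),
    // Hypothetical "Share" column: fraction of the backlog in each state, between 0 and 1
    WithShare = Table.AddColumn(Grouped, "Share", each [Count] / Total, type number),
    // Largest groups first
    Sorted = Table.Sort(WithShare, {{"Count", Order.Descending}})
in
    Sorted
```

Each added step also appears in Applied Steps, so it can still be inspected or removed from the Query Editor without touching the code.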

To be fair, I find it really cool and fascinating how it brings together such different user requirements and scenarios, not to mention that it takes your Excel knowledge up a notch, which is always a great skill to master.